엘지노 프로젝트

Study notes, 12.31

http://cs231n.github.io/linear-classify/

Why normalize the data -> to make training converge faster
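Here "normalizing" usually means zero-centering each feature and dividing it by its standard deviation. Below is a minimal dependency-free sketch of that idea. The `normalize` helper and the toy data are hypothetical, and in practice this is normally done with NumPy. The mean and std are computed on the training data and then reused unchanged for validation/test data.

```python
import math

def normalize(X):
    """Zero-center each feature (column) of X and scale it to unit std.

    X is a list of examples, each a list of feature values. Returns the
    normalized data plus the per-feature mean and std, so the same
    transform can be applied later to validation/test data.
    """
    n = len(X)
    d = len(X[0])
    means = [sum(row[j] for row in X) / n for j in range(d)]
    stds = []
    for j in range(d):
        var = sum((row[j] - means[j]) ** 2 for row in X) / n
        # Constant features would divide by zero, so leave their scale as 1.
        stds.append(math.sqrt(var) if var > 0 else 1.0)
    X_norm = [[(row[j] - means[j]) / stds[j] for j in range(d)] for row in X]
    return X_norm, means, stds

# Hypothetical data: two features on very different scales.
X = [[200.0, 0.1], [100.0, 0.3], [150.0, 0.2]]
X_norm, means, stds = normalize(X)
```

After the transform both columns have mean 0 and std 1, so no single feature dominates the gradient just because of its units.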

Why regularize

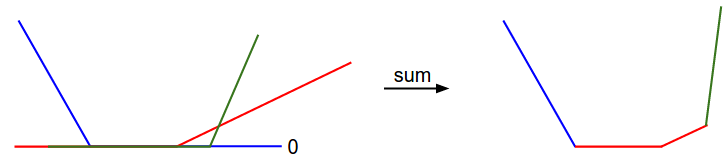

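In the SVM, W is not unique. If some W classifies every example with zero loss, then any multiple λW with λ > 1 also has zero loss, because scaling W stretches every score difference by the same factor.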

-> we wish to encode some preference for a certain set of weights W over others to remove this ambiguity.
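The following is a minimal pure-Python sketch of this ambiguity and how an L2 penalty R(W) = Σ W² removes it. The helper names (`scores`, `svm_loss`, `l2_penalty`, `scale`), the 3-class weights, the margin Δ = 1 and λ = 0.1 are all hypothetical choices for illustration. Both W and 2W satisfy every margin, so their hinge loss is 0. Their penalties differ, so the full loss "data loss + λR(W)" prefers the smaller W.

```python
def scores(W, x):
    # s = W x: one score per class (W has one row per class).
    return [sum(w_k * x_k for w_k, x_k in zip(row, x)) for row in W]

def svm_loss(W, x, y, delta=1.0):
    # Multiclass SVM (hinge) loss for one example with correct class y.
    s = scores(W, x)
    return sum(max(0.0, s[j] - s[y] + delta) for j in range(len(s)) if j != y)

def l2_penalty(W):
    # R(W): sum of squared weights.
    return sum(w * w for row in W for w in row)

def scale(W, c):
    return [[c * w for w in row] for row in W]

x = [1.0, 2.0]
y = 0
W = [[2.0, 1.0],   # class 0 (correct): score 4
     [0.5, 0.5],   # class 1: score 1.5
     [1.0, 0.0]]   # class 2: score 1
W2 = scale(W, 2.0)

lam = 0.1
total_W = svm_loss(W, x, y) + lam * l2_penalty(W)
total_W2 = svm_loss(W2, x, y) + lam * l2_penalty(W2)
# Data loss is 0 for both; only the penalty (6.5 vs 26.0) separates them.
```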

In addition to the motivation provided above, there are many desirable properties that come from including the regularization penalty. For example, it turns out that including the L2 penalty leads to the appealing max margin property in SVMs.

The most appealing property is that penalizing large weights tends to improve generalization, because it means that no input dimension can have a very large influence on the scores all by itself.

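Example with input x = [1, 1, 1, 1]: w1 = [1, 0, 0, 0], w2 = [0.25, 0.25, 0.25, 0.25]. Both give the same score (w1·x = w2·x = 1), but the L2 penalty is 1.0 for w1 and 0.25 for w2, so L2 regularization prefers the spread-out w2.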
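This is a quick numerical check of the w1/w2 example in plain Python. It assumes the input x = [1, 1, 1, 1] used in the cs231n notes, and the helper names `dot` and `l2_penalty` are hypothetical.

```python
def dot(a, b):
    return sum(ai * bi for ai, bi in zip(a, b))

def l2_penalty(w):
    return sum(wi * wi for wi in w)

x = [1.0, 1.0, 1.0, 1.0]
w1 = [1.0, 0.0, 0.0, 0.0]
w2 = [0.25, 0.25, 0.25, 0.25]

print(dot(w1, x), dot(w2, x))          # 1.0 1.0  -> identical scores
print(l2_penalty(w1), l2_penalty(w2))  # 1.0 0.25 -> L2 prefers w2
```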

The final classifier is encouraged to take all input dimensions into account by small amounts, rather than a few input dimensions very strongly.

This tends to lead to less overfitting.

Information theory view. The cross-entropy between a “true” distribution $p$ and an estimated distribution $q$ is defined as:

$$H(p, q) = -\sum_x p(x) \log q(x)$$

The Softmax classifier is hence minimizing the cross-entropy between the estimated class probabilities ($q = e^{f_{y_i}} / \sum_j e^{f_j}$) and the “true” distribution. In this interpretation, the true distribution puts all probability mass on the correct class: $p = [0, \ldots, 1, \ldots, 0]$ contains a single 1 at the $y_i$-th position. Moreover, the cross-entropy can be written in terms of entropy and the Kullback-Leibler divergence as $H(p, q) = H(p) + D_{KL}(p \| q)$. Since the entropy of the delta function $p$ is zero, this is also equivalent to minimizing the KL divergence between the two distributions (a measure of distance). In other words, the cross-entropy objective wants the predicted distribution to have all of its mass on the correct answer.
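The following minimal pure-Python sketch checks these identities numerically. The scores `f = [3.2, 5.1, -1.7]` are hypothetical (the same numbers as the cs231n softmax example), and the helper names are illustrative. With a one-hot $p$, the cross-entropy equals the Softmax loss $-\log q_{y_i}$ and also equals $D_{KL}(p \| q)$, because $H(p) = 0$.

```python
import math

def softmax(f):
    # Subtracting the max score avoids overflow and does not change the result.
    m = max(f)
    exps = [math.exp(fj - m) for fj in f]
    total = sum(exps)
    return [e / total for e in exps]

def cross_entropy(p, q):
    # H(p, q) = -sum_x p(x) log q(x); terms with p(x) = 0 contribute nothing.
    return -sum(px * math.log(qx) for px, qx in zip(p, q) if px > 0)

def entropy(p):
    return -sum(px * math.log(px) for px in p if px > 0)

def kl_divergence(p, q):
    return sum(px * math.log(px / qx) for px, qx in zip(p, q) if px > 0)

f = [3.2, 5.1, -1.7]   # hypothetical class scores f = W x
y = 0                  # index of the correct class
q = softmax(f)
p = [1.0 if k == y else 0.0 for k in range(len(f))]  # "true" one-hot distribution

loss = -math.log(q[y])  # Softmax loss L_i, about 2.04 for these scores
```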
